In our last Big Data blog, we discussed different Big Data analytics techniques. Before that, we covered several other aspects of Big Data, and in one of those blogs I described the “Functionalities of Big Data Reference Architecture Layers”. Continuing along the same lines, in this blog we will discuss the “Top 10 Open Source Data Extraction Tools”.

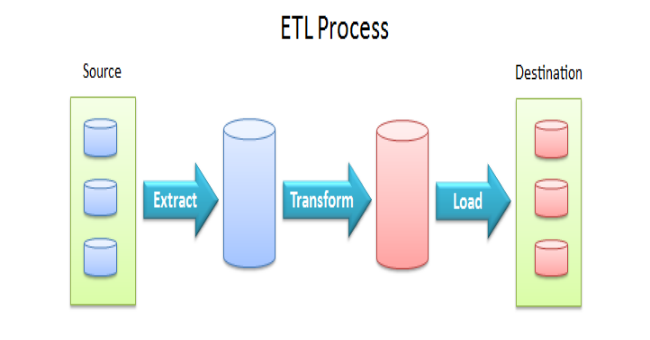

Big Data extraction tools collect data from many different sources and transform it into a structured form. These tools are more commonly known as ETL (Extract, Transform and Load) tools. Their functionality can be divided into the following three phases:

- Extract: pull data from homogeneous or heterogeneous data sources.

- Transform: convert the data into the proper format or structure for querying and analysis.

- Load: write the data into the final target (a database, or more specifically an operational data store, data mart, or data warehouse).
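To make the three phases concrete, here is a minimal sketch using only the Python standard library. It is an illustration under our own assumptions, not how any of the tools below work internally: the CSV data, the column names and the `orders` table are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data standing in for a CSV export from an operational system.
SOURCE_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,7
3,carol,not-a-number
"""

def extract(text):
    """Extract: read raw rows (every value is still a string) from the CSV source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize names, convert types, and drop rows that fail validation."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        clean.append((int(row["order_id"]), row["customer"].strip().title(), amount))
    return clean

def load(rows, conn):
    """Load: write the structured rows into the target table (SQLite as a stand-in)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(SOURCE_CSV)), conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 10.5), (2, 'Bob', 7.0)]
```

The third row is rejected during the transform phase, so only cleaned, typed rows reach the target.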

In ETL tools, the three phases usually execute in parallel. Because extraction takes time, a transformation process works on the data already received while more data is still being pulled, preparing it for loading. As soon as some data is ready to be loaded, loading into the target begins without waiting for the previous phases to complete.
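The overlapping phases described above can be sketched in Python with one thread per phase, connected by queues. This is a minimal illustration under our own assumptions, not how any particular ETL tool is implemented: the string records, the upper-casing "transformation" and the in-memory list used as the load target are all hypothetical.

```python
import queue
import threading

SENTINEL = object()  # marks the end of the stream between stages

def extractor(source, out_q):
    """Extract stage: push records downstream one at a time as they arrive."""
    for record in source:
        out_q.put(record)
    out_q.put(SENTINEL)

def transformer(in_q, out_q):
    """Transform stage: processes records already received while extraction continues."""
    while True:
        record = in_q.get()
        if record is SENTINEL:
            out_q.put(SENTINEL)
            break
        out_q.put(record.strip().upper())

def loader(in_q, target):
    """Load stage: writes each transformed record as soon as it is ready."""
    while True:
        record = in_q.get()
        if record is SENTINEL:
            break
        target.append(record)

def run_pipeline(source):
    extracted = queue.Queue(maxsize=10)    # bounded queues apply back-pressure
    transformed = queue.Queue(maxsize=10)
    target = []  # stands in for the target data store
    stages = [
        threading.Thread(target=extractor, args=(source, extracted)),
        threading.Thread(target=transformer, args=(extracted, transformed)),
        threading.Thread(target=loader, args=(transformed, target)),
    ]
    for t in stages:
        t.start()
    for t in stages:
        t.join()
    return target

print(run_pipeline([" alpha", "beta ", " gamma "]))  # ['ALPHA', 'BETA', 'GAMMA']
```

Because the queues are bounded, a fast extractor blocks instead of filling memory when the later stages fall behind, and with a single thread per stage the record order is preserved.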

Here are the top 10 open source data extraction (ETL) tools:

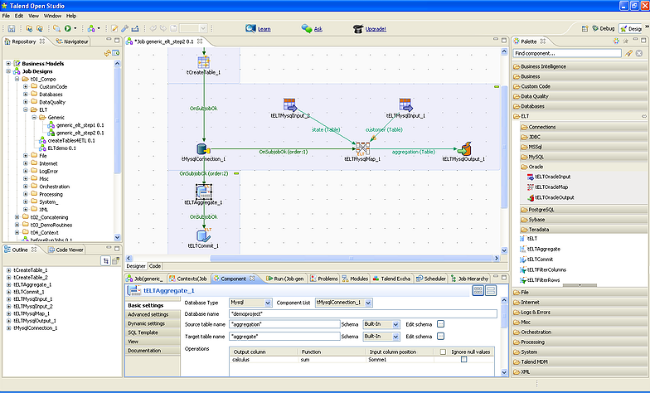

1. Talend Open Studio:

Talend Open Studio is one of the most powerful data integration ETL tools on the market. It is a versatile set of open source products for developing, testing, deploying and administering data management and application integration projects.

For ETL projects, Talend provides multiple data integration solutions, in both open source and commercial editions, including Talend Open Studio for Data Integration. Talend offers a rich feature set, including a graphical integrated development environment with an intuitive Eclipse-based interface, drag-and-drop flow design, and broad connectivity through more than 400 pre-configured application connectors that bridge databases, mainframes, file systems, web services, packaged enterprise applications, data warehouses, OLAP applications, Software-as-a-Service and cloud-based applications, and more.

2. Scriptella:

Scriptella is an open source ETL and script execution tool written in Java and released under the Apache License. Besides extracting, transforming and loading data, it is also used to execute scripts. It is simple to use, which is the main reason for its popularity. Its features include executing scripts written in SQL, JavaScript, JEXL and Velocity; database migration; interoperability with LDAP, JDBC, XML and other data sources; cross-database ETL operations; and import/export from/to CSV, text, XML and other formats.

3. KETL:

KETL is one of the best open source tools for data warehousing. It is Java-based and also makes use of XML and other languages. The engine is built on an open, multi-threaded, XML-based architecture. KETL's major features include support for integrating security and data management tools, proven scalability across multiple servers and CPUs and any volume of data, and no need for third-party scheduling, dependency and notification tools.

4. Pentaho Data Integrator – Kettle:

Pentaho describes itself as a BI provider that offers ETL tools as part of its data integration capabilities. These ETL capabilities are based on the Kettle project, which is a Java application and library. Kettle is an interpreter of ETL procedures written in XML format, and it provides a JavaScript engine to fine-tune the data manipulation process. It is a capable tool, with everything needed to build even complex ETL procedures.

Kettle (PDI) is the default ETL tool in the Pentaho Business Intelligence Suite. Its procedures can also be executed outside the Pentaho platform, provided that the Kettle libraries and a Java runtime are installed.

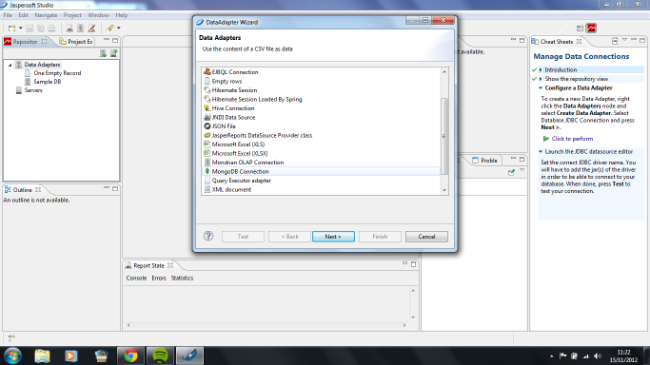

5. Jaspersoft ETL:

Jaspersoft ETL is easy to deploy and, according to its vendor, outperforms many proprietary and open source ETL systems. It is used to extract data from your transactional systems to create a consolidated data warehouse or data mart for reporting and analysis. Its features include a Business Modeler that gives a non-technical view of the information workflow; the Job Designer, a graphical tool for displaying and editing the ETL process; and the Transformation Mapper and other transformation components for defining complex mappings and transformations.

It can also track ETL statistics from start to finish with real-time debugging; allow simultaneous input from and output to multiple sources, including flat files, XML files, databases, web services, POP and FTP servers, through hundreds of available connectors; and monitor job events, execution times and data volumes from its activity monitoring console.

6. GeoKettle:

GeoKettle is a spatially enabled version of the generic ETL tool Kettle (Pentaho Data Integration). It is a powerful metadata-driven spatial ETL tool dedicated to integrating different spatial data sources in order to build and update geospatial data warehouses.

It enables the extraction of data from data sources; the transformation of that data to correct errors, cleanse it, change its structure and make it compliant with defined standards; and the loading of the transformed data into a target database management system (DBMS) in OLTP or OLAP/SOLAP mode, a GIS file or a geospatial web service.

7. Clover ETL:

This project is directed by OpenSys, a company based in the Czech Republic. CloverETL is Java-based and dual-licensed: besides the open source version, a commercially licensed version offers warranty and support. Its small footprint makes it easy for system integrators and ISVs to embed. It aims to provide a basic library of functions, including mapping and transformations. Its enterprise server edition is a commercial offering.

8. HPCC Systems:

HPCC Systems is an open source platform for Big Data analysis with a data refinery engine called Thor. Thor cleans, links, transforms and analyzes Big Data. It supports ETL (Extraction, Transformation and Loading) functions such as ingesting structured and unstructured data, data profiling, data hygiene and data linking out of the box. The data processed by Thor can be accessed concurrently by a large number of users in real time through Roxie, the data delivery engine, which provides highly concurrent, low-latency, real-time query capability.

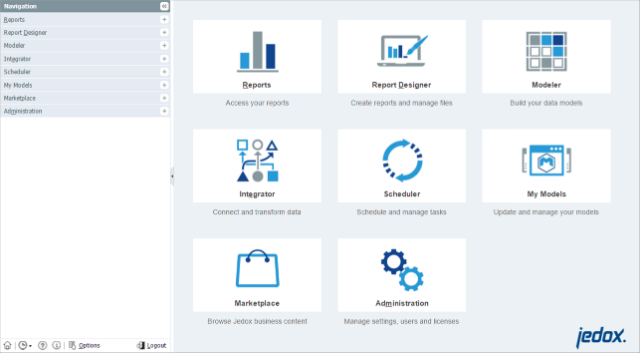

9. Jedox:

Jedox is an open source BI solution. It is used for performance management, covering strategy, planning, analysis and reporting, as well as the processes involved in ETL. The Open Core consists of an in-memory OLAP server, an ETL server and OLAP client libraries. With strong support for the Jedox OLAP server as both a source and a target system, the tool is designed to overcome the complications of OLAP analysis. Any conventional model can be transformed into an OLAP model using this ETL tool.

Jedox ETL makes working with cubes and dimensions straightforward: it can flexibly generate frequently needed time hierarchies and efficiently transform the relational model of source systems into an OLAP model.
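What "transforming a relational model into an OLAP model" and "generating a time hierarchy" mean can be illustrated in plain Python. This is not Jedox's API or its internal approach; it is a hedged sketch in which the sales rows, the region/product dimensions and the Year > Quarter > Month hierarchy are all hypothetical.

```python
from collections import defaultdict
from datetime import date

# Hypothetical relational source rows: one row per sale.
SALES = [
    ("North", "Widget", date(2023, 1, 15), 100.0),
    ("North", "Widget", date(2023, 2, 3), 50.0),
    ("South", "Gadget", date(2023, 4, 20), 75.0),
    ("North", "Widget", date(2023, 5, 9), 25.0),
]

def time_members(d):
    """Derive a Year > Quarter > Month hierarchy from a single date column."""
    year = str(d.year)
    quarter = f"{year}-Q{(d.month - 1) // 3 + 1}"
    month = f"{year}-{d.month:02d}"
    return [year, quarter, month]

def build_cube(rows):
    """Aggregate the measure into cells addressed by (region, product, time member).

    Each sale is rolled up into every level of the time hierarchy, so cells
    exist for the month, the quarter and the year.
    """
    cube = defaultdict(float)
    for region, product, d, amount in rows:
        for member in time_members(d):
            cube[(region, product, member)] += amount
    return dict(cube)

cube = build_cube(SALES)
print(cube[("North", "Widget", "2023-Q1")])  # 150.0
print(cube[("North", "Widget", "2023")])     # 175.0
```

A real OLAP server stores and pre-aggregates such cells far more efficiently; the dictionary here only shows how flat rows become cells addressed by dimension members.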

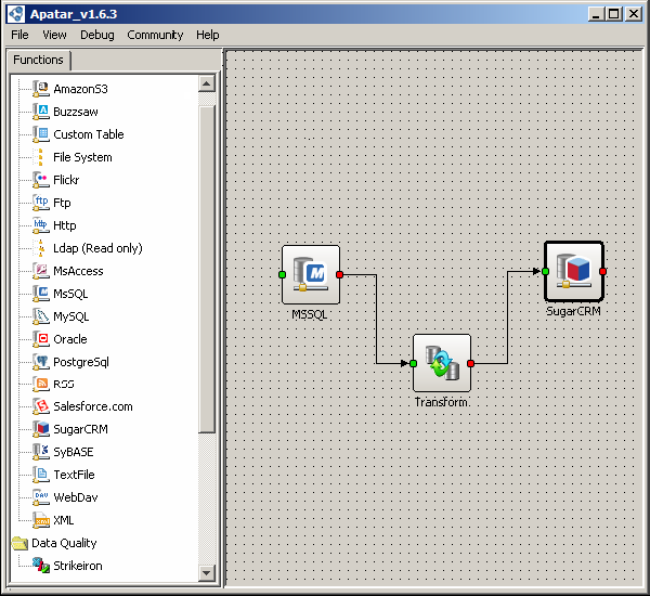

10. Apatar ETL:

Apatar ETL offers a broad set of capabilities in an open source package. Its features include connectivity to Oracle, MS SQL, MySQL, Sybase, DB2, MS Access, PostgreSQL, XML, InstantDB, Paradox, Borland JDataStore, CSV, MS Excel, Qed, HSQL, Salesforce.com and more. It provides a single interface to manage all integration projects, flexible deployment options and bi-directional integration. It is platform-independent and 100% Java-based, running on Windows, Linux and Mac, and its visual job designer and mapping let non-developers design and perform transformations without coding.

Open source tools always have some limitations, for example in advanced features, storage facilities and advanced analytics. So it is advisable to use licensed tools. My next blog will discuss licensed data extraction tools.
